In robotics, the dance of problem-solving takes on a rhythm akin to a complex symphony, where each instrument must perform in perfect harmony. This past year, I had the privilege of orchestrating a project that sought to interpret acoustics using a robotic system equipped with hydrophones. Our goal was simple: develop a method for accurately locating the source of sound signals underwater, a capability with profound implications for marine research and robotics.

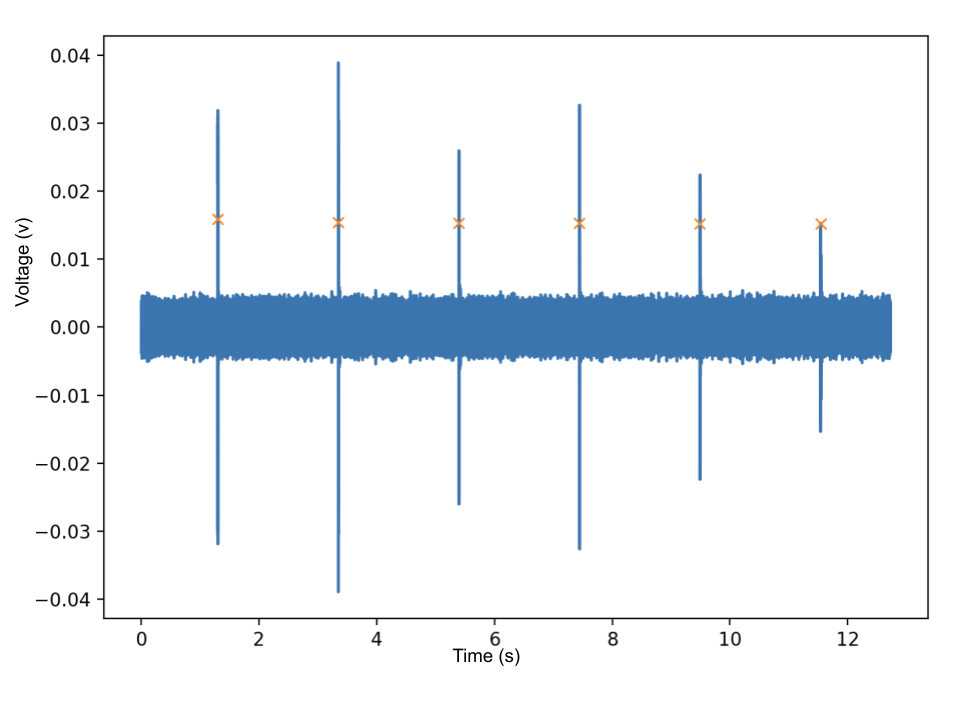

Initially, our team designed a triangular arrangement of three hydrophones mounted on the robot’s periphery. This configuration was based on the hypothesis that the order in which the hydrophones received a signal could pinpoint the sound’s octant of origin. Every two seconds, like the beat of a drum, our system received a ping from an underwater pinger, the source of our signals. These pings required both hardware and software filters to clean up the received data. After filtering, each ping showed up as a clean, usable sine wave.
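For readers who want something concrete, here is a minimal sketch of what a software filter stage could look like. It is not the code from our project: the 30 kHz pinger frequency, the 200 kHz sample rate, the Q value, and every function name are assumptions chosen for illustration. It uses a standard second-order (biquad) band-pass filter to keep energy near the assumed pinger tone and reject everything else.

```python
import math

# Assumed values for illustration only; the real pinger frequency and
# sample rate are not stated in this post.
SAMPLE_RATE_HZ = 200_000
PINGER_HZ = 30_000


def bandpass_coefficients(center_hz, sample_rate_hz, q):
    """Normalized biquad band-pass coefficients (unity gain at center_hz)."""
    w0 = 2.0 * math.pi * center_hz / sample_rate_hz
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha
    b = (alpha / a0, 0.0, -alpha / a0)
    a = (-2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0)
    return b, a


def bandpass(samples, center_hz, sample_rate_hz, q=10.0):
    """Run samples through the band-pass filter and return the output list."""
    (b0, b1, b2), (a1, a2) = bandpass_coefficients(center_hz, sample_rate_hz, q)
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x0 in samples:
        y0 = b0 * x0 + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        out.append(y0)
        x2, x1 = x1, x0  # shift input history
        y2, y1 = y1, y0  # shift output history
    return out


def rms(values):
    """Root-mean-square level of a sequence of samples."""
    return math.sqrt(sum(v * v for v in values) / len(values))


def tone(freq_hz, n_samples, sample_rate_hz=SAMPLE_RATE_HZ):
    """Generate a unit-amplitude sine wave for testing the filter."""
    step = 2.0 * math.pi * freq_hz / sample_rate_hz
    return [math.sin(step * n) for n in range(n_samples)]
```

Feeding `tone(PINGER_HZ, 2000)` through `bandpass` should leave it essentially untouched once the filter settles, while a 5 kHz tone comes out strongly attenuated. A higher `q` narrows the pass band at the cost of a slower response to the start of each ping, which matters if arrival timing is what you are after.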

However, we quickly found the music to be out of tune. The first round of testing revealed a problem: the robot’s own structure impeded the acoustic signals on their way to the hydrophones. This disrupted the expected sequence of ping receptions that our initial setup relied on, and the resulting inconsistency led to unreliable predictions from the neural network we designed to interpret the hydrophone data.

Then we attempted a new song. Our breakthrough came from a shift in perspective and configuration. Recognizing that the sides of the robot itself posed a barrier to signal integrity, we repositioned our hydrophones into a square formation at the robot’s stern, just below its frame. This strategic placement, with merely two inches between neighboring hydrophones, minimized the structural interference. The proximity of the hydrophones to each other created a tightly knit grid that could capture the pings with enhanced precision and significantly less noise.

By reducing the distance sound had to travel to each sensor, and ensuring minimal obstruction from the robot’s own body, we transformed our system’s capability to detect and analyze underwater acoustics. Our revised system displayed a marked improvement in the consistency and reliability of the data captured. In addition, moving from three hydrophones to four led us to abandon the deep learning prediction approach in favor of something simpler: the hydrophone that receives the ping first tells us which quadrant the sound came from.
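The first-arrival idea is simple enough to sketch in a few lines. The version below is an illustration under stated assumptions rather than our actual code: the corner labels, the threshold-crossing detector, and the function names are all hypothetical, and it assumes each hydrophone sits at the corner of the square that faces its quadrant.

```python
from typing import Mapping, Optional, Sequence

# Hypothetical labels: one hydrophone per corner of the square array,
# named for the quadrant (relative to the array center) that it faces.
QUADRANTS = ("front-left", "front-right", "back-left", "back-right")


def arrival_index(samples: Sequence[float], threshold: float) -> Optional[int]:
    """Index of the first sample whose magnitude reaches the threshold.

    Returns None if the ping never crosses the threshold on this channel.
    """
    for i, sample in enumerate(samples):
        if abs(sample) >= threshold:
            return i
    return None


def first_arrival_quadrant(arrival_times: Mapping[str, float]) -> str:
    """Return the quadrant whose hydrophone heard the ping first.

    arrival_times maps each label in QUADRANTS to an arrival time
    (seconds or sample indices, as long as the unit is consistent).
    Exact ties resolve to whichever label comes first in QUADRANTS.
    """
    missing = set(QUADRANTS) - set(arrival_times)
    if missing:
        raise ValueError(f"missing arrival times for: {sorted(missing)}")
    return min(QUADRANTS, key=lambda q: arrival_times[q])


# Example: arrival times in microseconds after an arbitrary reference.
example = {
    "front-left": 61.0,
    "front-right": 34.0,
    "back-left": 48.0,
    "back-right": 12.0,
}
print(first_arrival_quadrant(example))
```

One practical consequence of the tight spacing: sound travels at roughly 1,500 m/s in water, so two hydrophones two inches (about 5 cm) apart hear the same ping at most around 34 microseconds apart. Any timing scheme like this one needs a sample rate high enough to resolve differences of that size.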

Although a song may begin off tune and off beat, it can still end in harmony.