As we’re in the final weeks of the spring 2025 semester, the CS team is hard at work preparing our robots for the RoboSub 2025 tasks. While a significant portion of our work takes place at the pool (check out our pool test updates), we’ve also been making progress on a few other important projects.

Fiber Optic Gyroscope

At RoboSub 2024, the biggest issue we encountered was severe drift in our IMU’s yaw readings, which exceeded 10° per minute. This threw off the robot’s state estimation and caused it to rotate unpredictably. To address this issue, we invested in a single-axis fiber optic gyroscope, which provides extremely accurate angular velocity readings.

Integrating the gyro was challenging. The gyro communicates over RS-422, a first for our team, and requires a 1000 Hz square-wave trigger signal.

Our initial approach relied exclusively on PySerial to trigger the gyro and parse the received data. During testing, however, we noticed three problems: the gyro was producing data at approximately 950 Hz instead of 1000 Hz, the data wasn’t smooth, and it frequently had checksum errors. After consulting the manufacturer, we learned that for the best results, the trigger signal should be generated directly in hardware rather than in software, and they recommended using an FPGA or microcontroller to do so.
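For readers who haven't parsed a serial sensor stream before, here is a minimal sketch of what the software side involves: pulling fixed-length frames out of a byte buffer and rejecting any whose checksum doesn't match. The gyro's actual frame format isn't described here, so the layout below (a hypothetical `0xA5` sync byte, a little-endian float32 rate, and a one-byte additive checksum) and the helper names `parse_frames` and `make_frame` are illustrative assumptions, not the manufacturer's specification.

```python
import struct

HEADER = 0xA5   # hypothetical sync byte, NOT the real gyro's
FRAME_LEN = 6   # hypothetical layout: header + 4-byte float32 + 1-byte checksum


def make_frame(rate: float) -> bytes:
    """Build one frame in the hypothetical format (useful for testing)."""
    payload = struct.pack("<f", rate)
    return bytes([HEADER]) + payload + bytes([sum(payload) & 0xFF])


def parse_frames(buffer: bytearray) -> tuple[list[float], int]:
    """Consume complete frames from the front of `buffer` in place.

    Returns (readings, checksum_errors). A trailing partial frame is left
    in the buffer so the next call can finish it once more bytes arrive.
    """
    readings, errors = [], 0
    while len(buffer) >= FRAME_LEN:
        if buffer[0] != HEADER:
            del buffer[0]  # not a frame start: skip one byte and rescan
            continue
        payload = bytes(buffer[1:5])
        if sum(payload) & 0xFF == buffer[5]:
            readings.append(struct.unpack("<f", payload)[0])
            del buffer[:FRAME_LEN]
        else:
            errors += 1
            del buffer[0]  # bad checksum: drop this header and resynchronize
    return readings, errors
```

In a live reader, `buffer` would be topped up with bytes from PySerial's `serial.Serial.read()` before each call. Resynchronizing one byte at a time keeps a single corrupted frame from derailing the frames that follow it.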

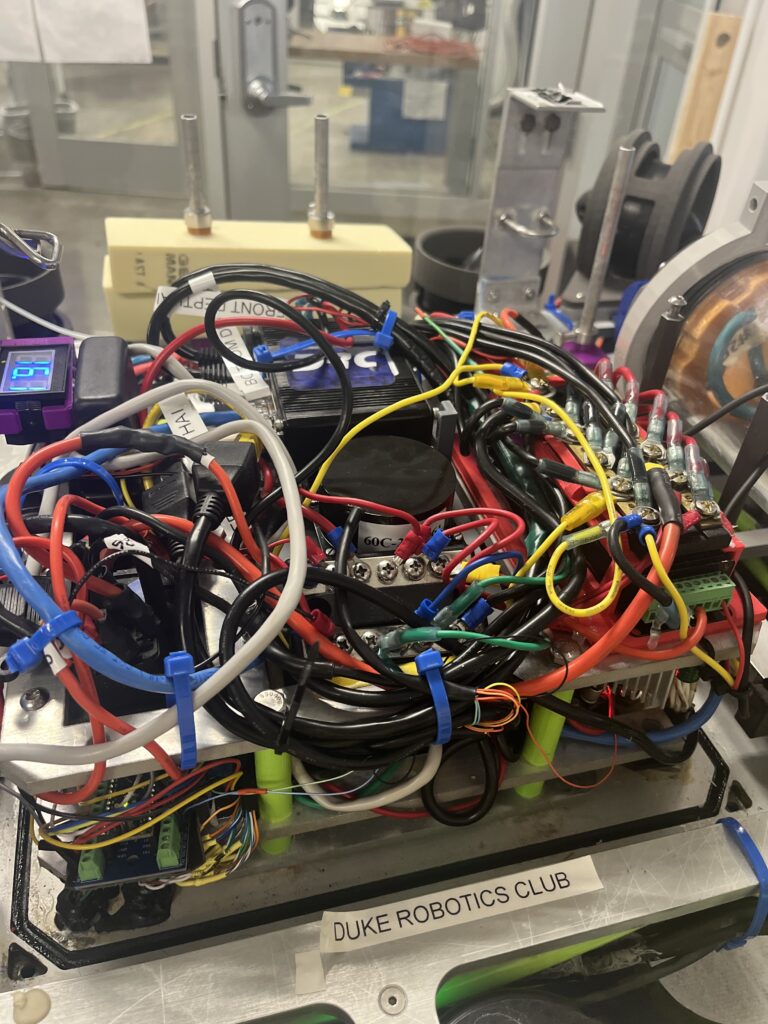

To accommodate the new gyroscope, the mechanical team redesigned the top mount on Oogway’s electrical stack, shifting the Ethernet switch and IMU to make space. Once the gyro was securely mounted and connected to Oogway’s computer, we focused on generating the hardware-based trigger signal.

RS-422 uses differential signaling, so the trigger has to be sent as two complementary square waves (one high while the other is low, and vice versa). We used the Timer2 hardware timer on Oogway’s peripheral Arduino to generate the pair of waves. This setup successfully triggered the gyro, and we now receive data at exactly 1000 Hz.
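The timer setting for the trigger follows from a short calculation. This sketch assumes a 16 MHz AVR board such as an Uno or Nano (which Arduino Oogway uses isn't stated), Timer2 in CTC mode, and both trigger lines flipped together on every compare match. Because one full square-wave period spans two compare matches, the output frequency is f_clk / (2 · N · (OCR2A + 1)), where N is the prescaler. The helper names are hypothetical, and the Python only models the arithmetic and the pin states; the real firmware would be an Arduino sketch.

```python
# Prescalers available on Timer2 of an ATmega328P (assumed board).
TIMER2_PRESCALERS = (1, 8, 32, 64, 128, 256, 1024)


def timer2_ctc_options(f_clk_hz: int, f_out_hz: int) -> list[tuple[int, int]]:
    """Return (prescaler, OCR2A) pairs that produce exactly f_out_hz.

    In CTC mode the counter resets every OCR2A + 1 ticks. Flipping the
    outputs on each compare match means one period covers two matches:
    f_out = f_clk / (2 * N * (OCR2A + 1)).
    """
    options = []
    for n in TIMER2_PRESCALERS:
        ticks, rem = divmod(f_clk_hz, 2 * n * f_out_hz)
        # Timer2 is 8-bit, so OCR2A + 1 must lie between 1 and 256.
        if rem == 0 and 1 <= ticks <= 256:
            options.append((n, ticks - 1))
    return options


def complementary_pair(n_matches: int) -> list[tuple[int, int]]:
    """Levels of the two trigger lines after each compare match.

    Both lines are written from one state variable, so they are always
    opposite, which is the differential pair RS-422 expects.
    """
    state, levels = 0, []
    for _ in range(n_matches):
        state ^= 1
        levels.append((state, state ^ 1))
    return levels
```

For a 16 MHz clock and a 1000 Hz trigger, `timer2_ctc_options(16_000_000, 1000)` returns `[(32, 249), (64, 124)]`: a /32 prescaler with OCR2A = 249, or /64 with OCR2A = 124. Driving both pins from a single state variable is what keeps them strictly complementary.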

We incorporated the gyro’s angular velocity readings into our sensor fusion alongside the IMU, DVL, and pressure sensor data. Our pool tests on March 22 and 23 showed that this eliminated the short-term yaw drift we saw at RoboSub 2024 and limited our long-term yaw drift to only 2-3°, making this the most accurate state estimate we’ve ever had.
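Gyro accuracy matters so much because yaw is obtained by integrating angular velocity over time, so any constant bias in the rate reading grows into an ever-larger heading error. The sketch below shows only that integration step (plain dead reckoning, not the sensor fusion described above, which also draws on the IMU, DVL, and pressure sensor), and the helper names are hypothetical. By the same arithmetic, an uncorrected rate bias of 10/60 ≈ 0.17°/s would on its own produce drift of 10° per minute.

```python
GYRO_RATE_HZ = 1000          # trigger / output rate from the text
DT_S = 1.0 / GYRO_RATE_HZ    # time between samples: 1 ms


def integrate_yaw(rates_dps, dt_s, yaw0_deg=0.0):
    """Dead-reckon yaw (degrees) from angular-rate samples in deg/s.

    Each sample contributes rate * dt; the result is wrapped to [-180, 180).
    """
    yaw = yaw0_deg
    for rate in rates_dps:
        yaw += rate * dt_s
    return (yaw + 180.0) % 360.0 - 180.0


def drift_per_minute(bias_dps):
    """Heading error (degrees) accumulated over 60 s from a constant rate bias."""
    return bias_dps * 60.0
```

At 1000 Hz, one second of samples at a steady 10°/s advances yaw by 10°, and a heading past +180° wraps around to the negative side.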

Encouraged by these results, we will purchase a second fiber optic gyroscope to integrate on Crush.

Torpedo CV Model

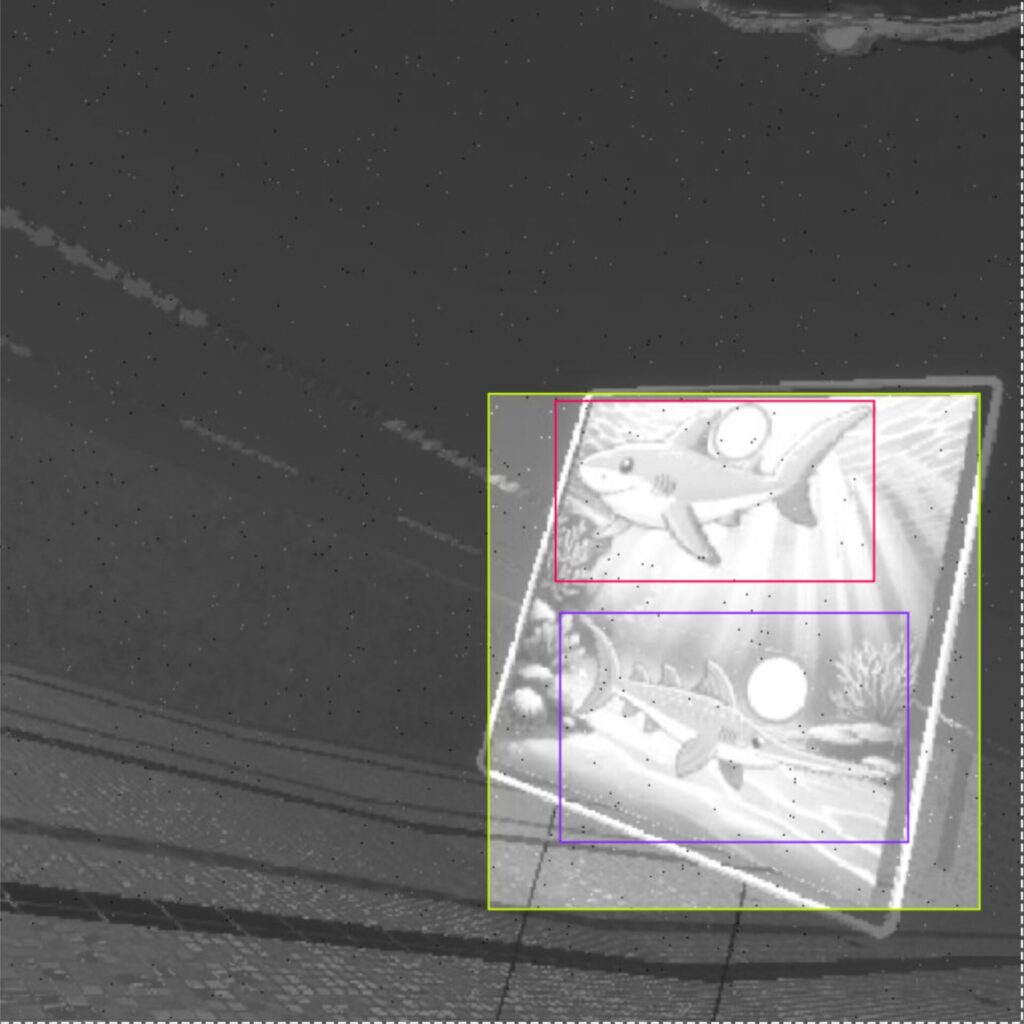

The CV team has trained our first deep learning model of the year, a torpedo detector, which is key to completing the torpedoes task. Following the success of last year’s synthetic training image generation, we decided to go synthetic again. Using Unity’s ability to generate bounding boxes automatically, we labeled three classes for the model to detect: the entire torpedo banner, the shark and its associated torpedo target, and the swordfish and its associated torpedo target. We generated 10,000 images from a variety of locations and camera angles in our Unity environment of the Woollett Aquatics Center. Our dataset is publicly available on Roboflow. As before, we augmented our images with the following modifications:

- 7 training images per Unity-generated image (70,000 images total)

- Up to ±25° hue

- Up to ±33% saturation

- Up to ±25% brightness

- Up to ±10% exposure

- Up to 0.3 px blur

- Noise on up to 1.05% of pixels
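To illustrate what two of these augmentations do, here is a minimal pure-Python sketch of brightness scaling (±25%) and pixel noise (up to 1.05% of pixels) on a grayscale image, producing 7 copies per source image. It is not Roboflow's implementation: we assume salt-and-pepper noise and a single multiplicative brightness factor, we omit hue, saturation, exposure, and blur, and the function names are hypothetical.

```python
import random


def augment(image, rng, max_brightness=0.25, max_noise_frac=0.0105):
    """Return one augmented copy of a grayscale image.

    `image` is a list of rows of 0-255 ints; the input is not modified.
    """
    h, w = len(image), len(image[0])
    # Brightness: scale every pixel by one random factor
    # (in [0.75, 1.25] with the defaults), clamped to 0-255.
    factor = rng.uniform(1.0 - max_brightness, 1.0 + max_brightness)
    out = [[min(255, max(0, round(p * factor))) for p in row] for row in image]
    # Salt-and-pepper noise: set a random fraction (up to 1.05% with the
    # defaults) of distinct pixels to pure black or pure white.
    n_noisy = int(rng.uniform(0.0, max_noise_frac) * h * w)
    for idx in rng.sample(range(h * w), n_noisy):
        out[idx // w][idx % w] = rng.choice((0, 255))
    return out


def make_copies(image, copies=7, seed=0):
    """Generate `copies` independently augmented versions of one image."""
    rng = random.Random(seed)
    return [augment(image, rng) for _ in range(copies)]
```

At 7 copies per render, the 10,000 Unity images become the 70,000-image dataset. Seeding the generator makes a given set of augmentations reproducible.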

To remove any potential color bias, we once again trained the model on grayscale images. With 1,200 and 600 of our 70,000 images reserved for validation and testing, respectively, 68,200 images remained for a healthy-sized training set. After around 10 hours of training, the model looks good so far in testing on land, and we look forward to evaluating its performance underwater next weekend. We will be watching for any color-related issues, especially in the underwater environment.
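The two preprocessing steps in this paragraph can be sketched as follows: converting an RGB image to grayscale and carving a fixed number of validation and test images out of a shuffled dataset. The ITU-R BT.601 luma weights (0.299, 0.587, 0.114) are a common choice for grayscale conversion but an assumption here, since Roboflow's exact conversion may differ; `to_grayscale` and `split_dataset` are hypothetical helpers.

```python
import random


def to_grayscale(rgb_image):
    """Convert rows of (r, g, b) tuples to rows of 0-255 luma values.

    Uses ITU-R BT.601 weights (an assumption; other tools may differ).
    """
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in rgb_image
    ]


def split_dataset(items, n_val, n_test, seed=0):
    """Shuffle items reproducibly, then return (train, val, test) lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    val = shuffled[:n_val]
    test = shuffled[n_val:n_val + n_test]
    train = shuffled[n_val + n_test:]
    return train, val, test
```

With 1,200 validation and 600 test images, 68,200 of the 70,000 images (about 97.4%) remain for training. One design caveat: if the split is drawn from augmented images, copies of the same Unity render can land in both training and validation, so grouping by source image before splitting gives a more trustworthy validation score.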