2023-Present: Oogway

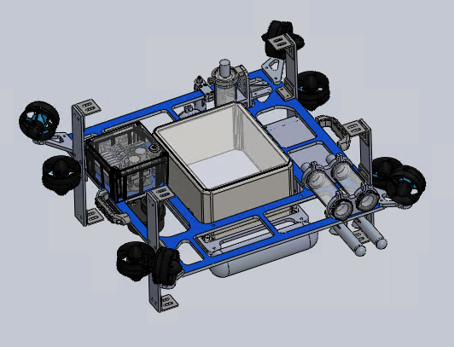

Oogway marks one of the largest design overhauls in club history.

Apart from a completely new layout, Oogway sports all-new CV software, newly added sonar integration, upgraded cameras, and more!

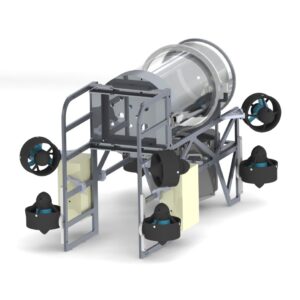

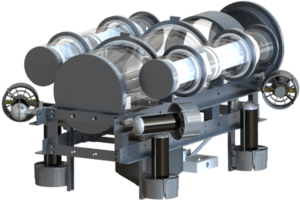

Mechanical

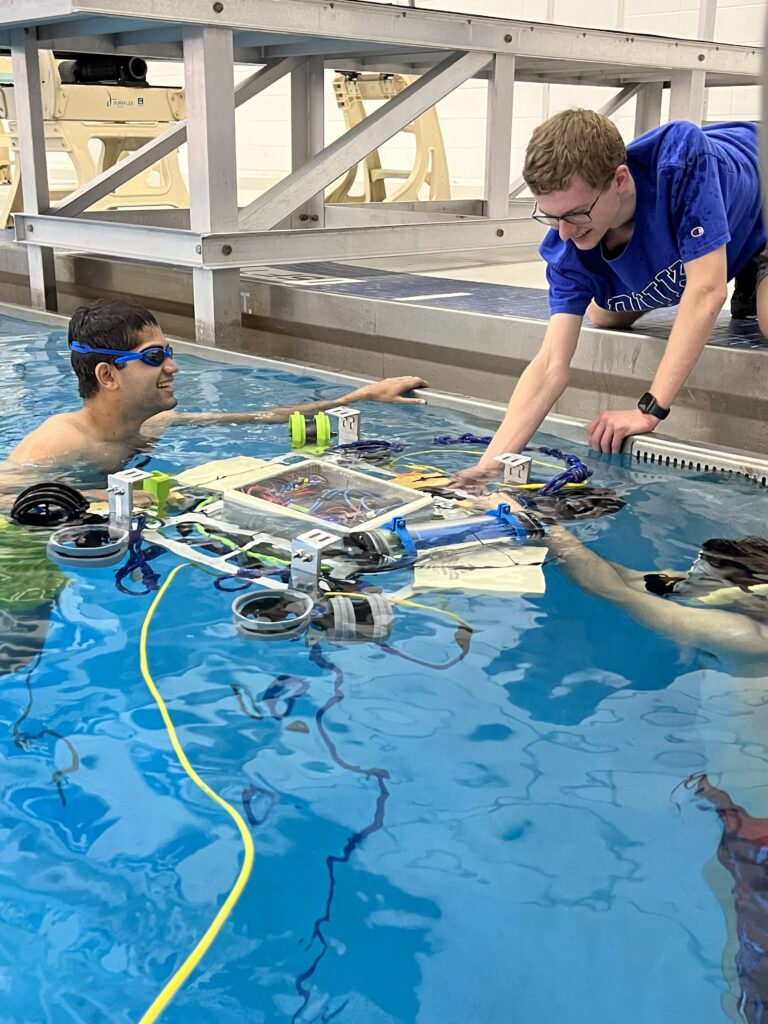

Oogway is also designed with adaptability in mind. The frame has mounting rails built directly into it, offering enhanced modularity. Every component can be repositioned easily, allowing us to optimize for factors such as buoyancy.

One of the most notable changes is the symmetrical layout of the entire robot. This, combined with the increased torque from the radially positioned thrusters, enables greater maneuverability when spinning.

There is a water-tight capsule at the core of the robot which houses all the electronics. We modified the capsule to have a transparent lid, which allows for visual monitoring. The battery, situated in a separate compartment, can be easily replaced when needed.

Electrical

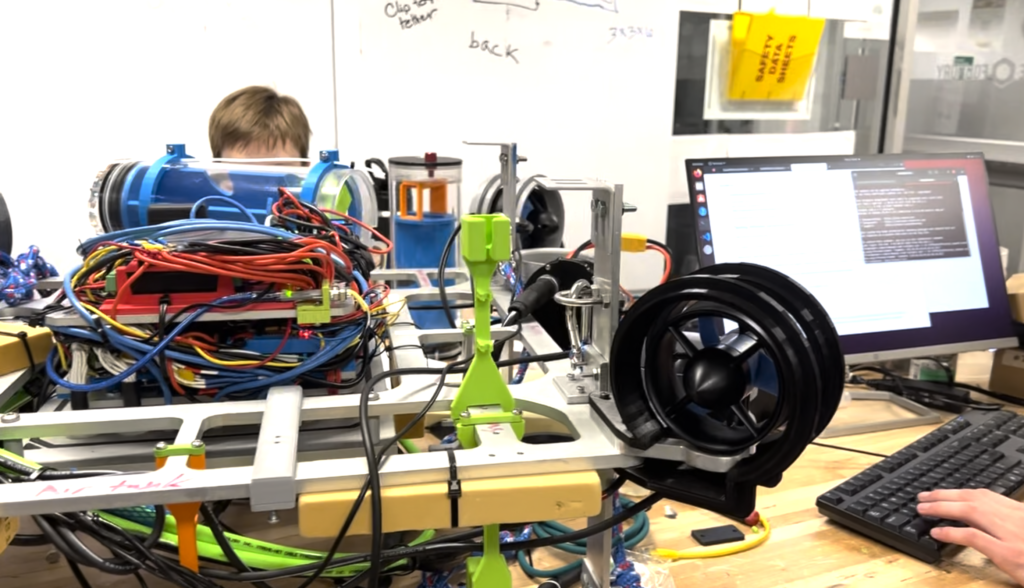

The electrical stack sits tightly at the core of the robot. Additions this year include a PoE-enabled camera, data and power connections for the sonar, and software control for switching off the camera power system.

The stack houses an Asus mini PC, with CV processing done on a dedicated, in-camera computer. The stack also contains a PoE switch and a bus bar for power distribution. Sensory components and ESCs attach to an I2C multiplexer connected to an onboard Arduino.

The two most important sensors are the IMU and the DVL; the former sits in the stack at the robot's center of mass, and the latter is mounted to the frame.

Other important components include DC-DC converters, replaceable fuses, and the entire Acoustics stack.

Waterproofing of electrical components is done through a combination of waterproof heat shrink and epoxy casings.

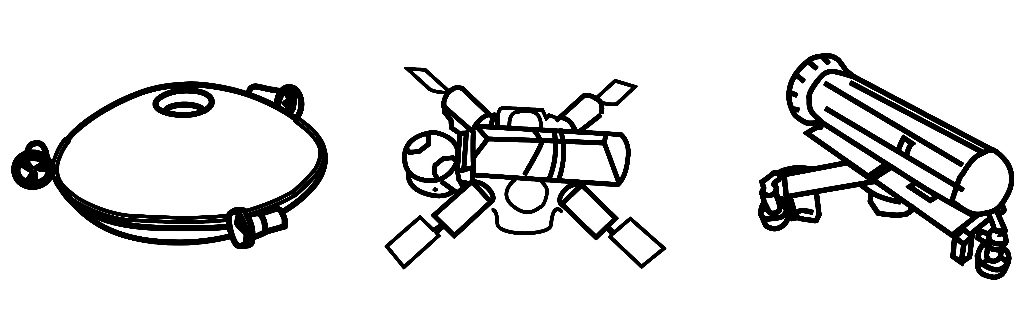

Acoustics

The acoustics system has had various improvements, with both electrical and software advances.

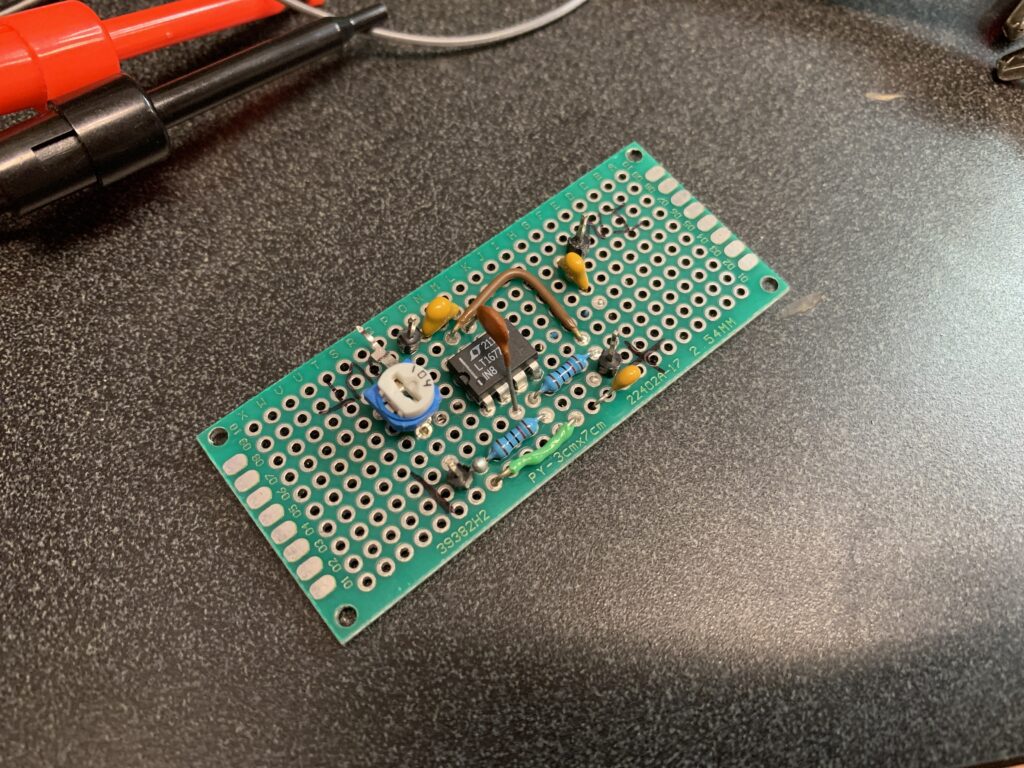

The amplification characteristics were tuned using a custom single-channel, continuously variable amplifier.

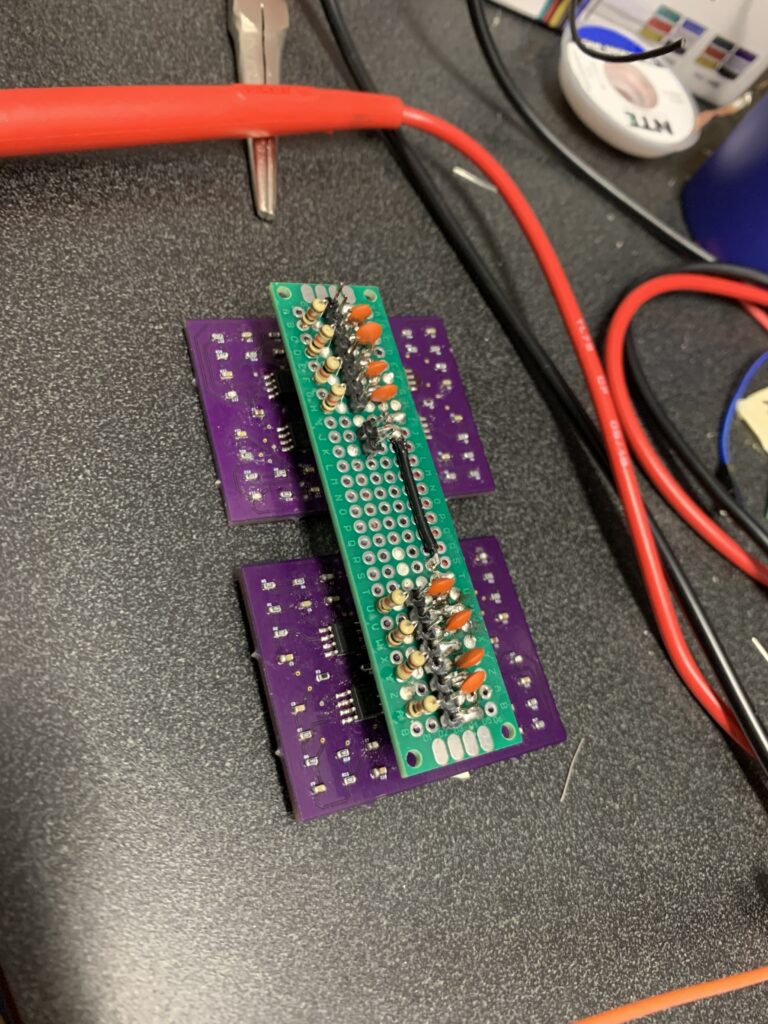

In the frequency domain, the existing low-pass filter (LPF) was upgraded to a more desirable bandpass design. This was done without modifying the existing SMT boards: a backpack was developed that attaches directly to them.

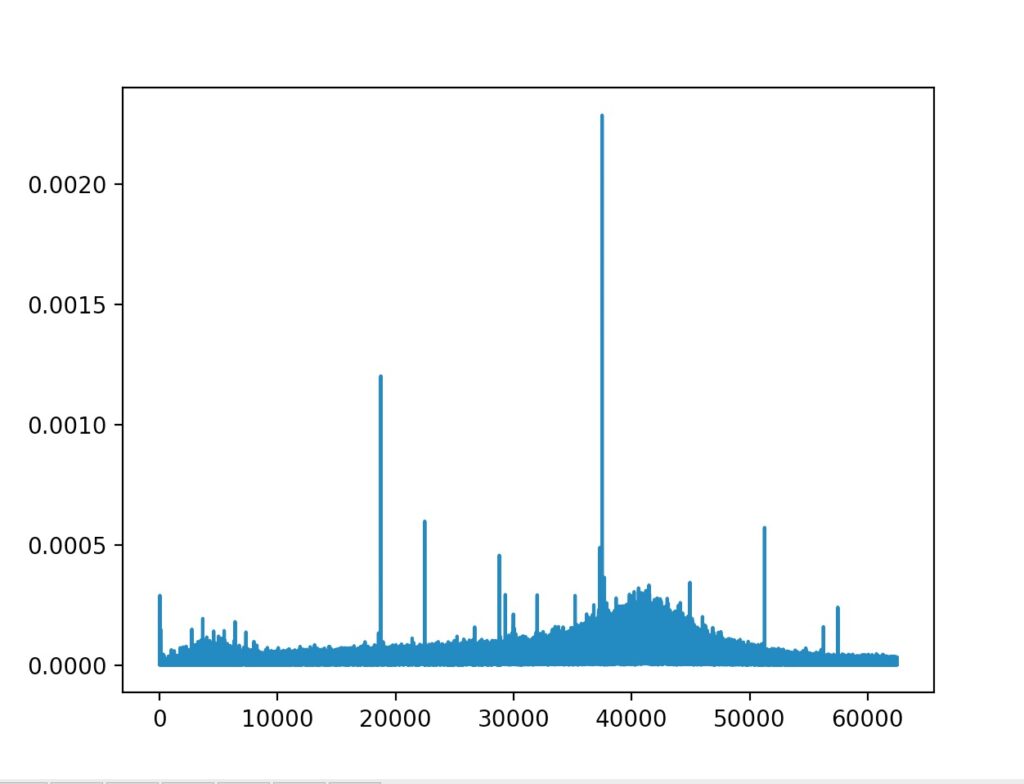

The effects of these changes were noticeable. On a Bode plot, the shape of the bandpass is clearly visible. In the frequency domain, the ~38 kHz ping is easily recognizable, even from across the pool.

Further development is aimed at reducing reflection-based aliasing, increasing software filtering, and improving SNR. The acoustics algorithm produces accurate results on simulated noisy data, but does not yet fully account for these factors.

The algorithm is based on a 4-dimensional time-difference-of-arrival (TDoA) approach solved by gradient descent, optimized by a computationally cheap time-of-arrival (TOA) guessing server.

A software Butterworth filter sets up a correlation to determine relative phase differences between channels. The algorithm chooses a window with relatively small variance in phase differences. By comparing the four hydrophones pairwise and applying Euclidean geometry, the algorithm sets up the gradient descent problem.
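To make the pairwise setup concrete, here is a minimal sketch of a TDoA fit by gradient descent. It is an illustration under stated assumptions, not the team's implementation: the function names, the hydrophone geometry, the 1480 m/s speed of sound, and the pinger position are all hypothetical, the sketch solves only for three position coordinates (the source does not spell out its fourth dimension), and a simple backtracking line search is swapped in to pick the step size.

```python
import math
from itertools import combinations

SPEED_OF_SOUND = 1480.0  # m/s, assumed nominal value for water


def dist(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def simulate_tdoas(pinger, hydrophones):
    """Ideal pairwise arrival-time differences t_i - t_j, in seconds."""
    return {
        (i, j): (dist(pinger, hydrophones[i]) - dist(pinger, hydrophones[j]))
        / SPEED_OF_SOUND
        for i, j in combinations(range(len(hydrophones)), 2)
    }


def loss_and_grad(p, hydrophones, tdoas):
    """Sum of squared range-difference residuals and its gradient w.r.t. p."""
    loss = 0.0
    grad = [0.0, 0.0, 0.0]
    for (i, j), dt in tdoas.items():
        di = dist(p, hydrophones[i])
        dj = dist(p, hydrophones[j])
        r = (di - dj) - SPEED_OF_SOUND * dt
        loss += r * r
        for k in range(3):
            grad[k] += 2.0 * r * (
                (p[k] - hydrophones[i][k]) / di - (p[k] - hydrophones[j][k]) / dj
            )
    return loss, grad


def locate(hydrophones, tdoas, guess, iterations=300):
    """Normalized gradient descent with a backtracking (Armijo) line search."""
    p = list(guess)
    loss, grad = loss_and_grad(p, hydrophones, tdoas)
    for _ in range(iterations):
        norm = math.sqrt(sum(g * g for g in grad))
        if norm == 0.0:
            break
        step = 1.0  # metres; halved until the loss decreases enough
        while step > 1e-12:
            trial = [p[k] - step * grad[k] / norm for k in range(3)]
            trial_loss, trial_grad = loss_and_grad(trial, hydrophones, tdoas)
            if trial_loss <= loss - 0.3 * step * norm:
                p, loss, grad = trial, trial_loss, trial_grad
                break
            step *= 0.5
        else:
            break  # no acceptable step found; treat as converged
    return p, loss


def bearing_error_deg(a, b):
    """Angle between two position vectors as seen from the origin."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / (na * nb)))))


# Hypothetical geometry: four hydrophones 1.5 cm apart, pinger a few metres away.
hydrophones = [(0.0, 0.0, 0.0), (0.015, 0.0, 0.0), (0.0, 0.015, 0.0), (0.0, 0.0, 0.015)]
true_pinger = (3.0, 4.0, -2.0)
guess = (1.0, 1.0, -1.0)  # stands in for a coarse, octant-level guess

tdoas = simulate_tdoas(true_pinger, hydrophones)
estimate, final_loss = locate(hydrophones, tdoas, guess)
```

With hydrophones this close together, the fit mainly constrains the bearing to the pinger rather than its range, which is one reason a reasonable starting guess matters.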

The robot has eight dedicated hydrophones. Four hydrophones are located within half a wavelength of each other to prevent aliasing and are used for setting up the gradient descent computation. The other four are dedicated to providing a guess aimed at octant-level accuracy. These hydrophones are located on opposite sides of the robot and use absolute timings to determine the guessed pinger location.
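As a rough worked example of the half-wavelength constraint, the arithmetic below estimates the maximum spacing for the ~38 kHz ping mentioned earlier. The 1480 m/s speed of sound is an assumed nominal value (it varies with temperature and salinity), and the names are hypothetical.

```python
import math

SPEED_OF_SOUND_WATER = 1480.0  # m/s, assumed nominal value
PING_FREQUENCY_HZ = 38_000.0   # approximate ping frequency

wavelength_m = SPEED_OF_SOUND_WATER / PING_FREQUENCY_HZ
max_spacing_m = wavelength_m / 2.0


def max_phase_difference_rad(spacing_m):
    """Largest phase difference between two hydrophones, reached when the
    ping travels along the line joining them."""
    return 2.0 * math.pi * spacing_m / wavelength_m


print(f"wavelength: {wavelength_m * 100:.2f} cm")
print(f"max spacing: {max_spacing_m * 100:.2f} cm")
```

Under these assumptions the wavelength is about 3.9 cm, so the four hydrophones need to sit within roughly 1.9 cm of each other. At that spacing the phase difference never exceeds pi radians, so each measured phase difference maps to a single time delay.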

Computer Vision

The close integration of a new sonar system with the overhauled CV software strengthens Oogway's perception capabilities.

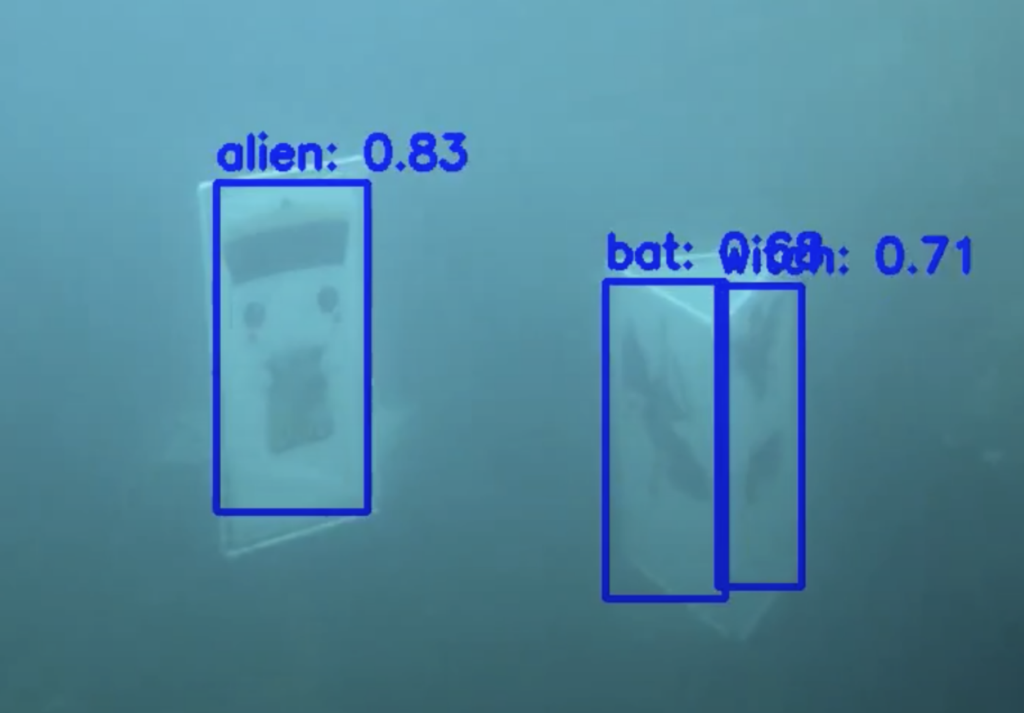

A focus this year was researching and deciding on a new CV object detection model for the robot. During last year’s competition, the team used the YOLOv4-tiny model for object detection. This year, with new models having been released, the team aimed for a higher detection rate and accuracy. After comparing the performance of different CV models on the datasets, the team upgraded to YOLOv7-tiny. Experimentally, YOLOv7-tiny was found to process the most frames per second while maintaining high accuracy and producing few extraneous detections.

Since the glyphs this year are randomly oriented, the CV team emphasized robustness in the new model. The real images were captured in a competition-like environment with different glyph orientations and camera angles.

In addition to real images, the team also generated an equal number of synthetic images in Unity, which allowed for quick picture generation with varying glyph orientations, varying camera positions (with respect to the buoy/gate), and the murkiness of the water in the TRANSDEC pool. This enabled the team to strategically supplement the existing dataset with images of angles that were uncommon in it.

Annotations were automatically generated alongside the synthetic images, allowing the team to generate thousands of synthetic images extremely quickly.
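As an illustration of what automatic annotation can look like, the sketch below converts a pixel-space bounding box into the plain-text label line commonly used to train YOLO-family models (class index followed by normalized center x, center y, width, and height). The function name and the example box are hypothetical, and the team's Unity pipeline may produce its labels differently.

```python
def to_yolo_label(class_id, box, image_width, image_height):
    """Format one bounding box as a YOLO-style label line.

    box is (x_min, y_min, x_max, y_max) in pixels; the output values are
    normalized to the range 0..1 by the image dimensions.
    """
    x_min, y_min, x_max, y_max = box
    x_center = (x_min + x_max) / 2.0 / image_width
    y_center = (y_min + y_max) / 2.0 / image_height
    width = (x_max - x_min) / image_width
    height = (y_max - y_min) / image_height
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# Hypothetical glyph rendered at pixels (100, 50)-(300, 250) in a 640x480 frame.
label = to_yolo_label(0, (100, 50, 300, 250), 640, 480)
print(label)  # 0 0.312500 0.312500 0.312500 0.416667
```

Because a renderer already knows where each object is drawn, a line like this can be written alongside every synthetic image without manual labeling.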

During testing and footage collection for last year’s competition, a live feed from the robot was not available, so footage had to be extracted once the robot was out of the water. This led to situations where session data was suboptimal without the team knowing until afterward.

This year, the CV team created a GUI that shows a live feed from the underwater robot, so that immediate changes can be made as necessary. The GUI also includes widgets that allow users to record bag footage, launch and track ROS nodes and topics, view live bounding boxes, and control and track camera status. Robot control was also added so that the team has more autonomy to collect the type of footage needed during testing sessions.

Software

This year, the structure of the existing codebase was reconsidered in a few respects. First, the existing controls modules were converted to use ROS’s Action system, rather than the more typical publish/subscribe model. This eliminates the need to publish desired states at a fixed rate: the robot sends new data to controls only when a new set point is assigned.

Migrating to ROS Actions ties in closely with another large change, this one to the task planning framework, SMACH. In the updated design, every state is designed to be nonblocking. This means that if a state needs to complete a task over a period of time (such as moving the robot), it will return one result repeatedly while the task is in progress. Once it achieves the desired goal, it will return a different result, indicating that the program should move on to the next state.

This approach is helpful because it allows additional states to be inserted between each “cycle” of a longer-running state. One common use of this technique is moving the robot to a target while regularly refreshing camera data to determine how close the robot is to the target. Previously this was done with concurrent states running on different threads of execution, which was complicated and far from ideal.
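The nonblocking pattern can be sketched in plain Python as follows. This is a simplified illustration, not the SMACH API or the team's code: the class names, outcome strings, and the dictionary standing in for the robot are all hypothetical.

```python
class MoveToTarget:
    """Nonblocking move: each call advances one cycle, then returns."""

    def __init__(self, target, step=1.0, tolerance=1e-6):
        self.target = target
        self.step = step
        self.tolerance = tolerance

    def execute(self, robot):
        remaining = self.target - robot["position"]
        if abs(remaining) <= self.tolerance:
            return "done"
        # Move at most one step per cycle, then hand control back.
        robot["position"] += max(-self.step, min(self.step, remaining))
        return "continue"


class RefreshCamera:
    """Short state inserted between cycles of the longer-running move."""

    def execute(self, robot):
        robot["camera_frames"] += 1
        return "done"


def run(states, transitions, start, robot, max_cycles=100):
    """Single-threaded loop: run a state, then follow its outcome."""
    trace = []
    current = start
    for _ in range(max_cycles):
        if current == "finished":
            break
        trace.append(current)
        outcome = states[current].execute(robot)
        current = transitions[current][outcome]
    return trace


states = {"move": MoveToTarget(target=3.0), "camera": RefreshCamera()}
transitions = {
    "move": {"continue": "camera", "done": "finished"},
    "camera": {"done": "move"},
}
robot = {"position": 0.0, "camera_frames": 0}
trace = run(states, transitions, "move", robot)
```

Here the move state returns "continue" until the target is reached, and the camera state runs between each cycle, all on a single thread of execution.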

Our GitHub repo for all robot code: https://github.com/DukeRobotics/robosub-ros

See our full technical report: https://drive.google.com/file/d/17dcy04170U7iFT0FrDMe-y51gfubipnv/view?usp=sharing

2020-2022: Cthulhu v2

2022

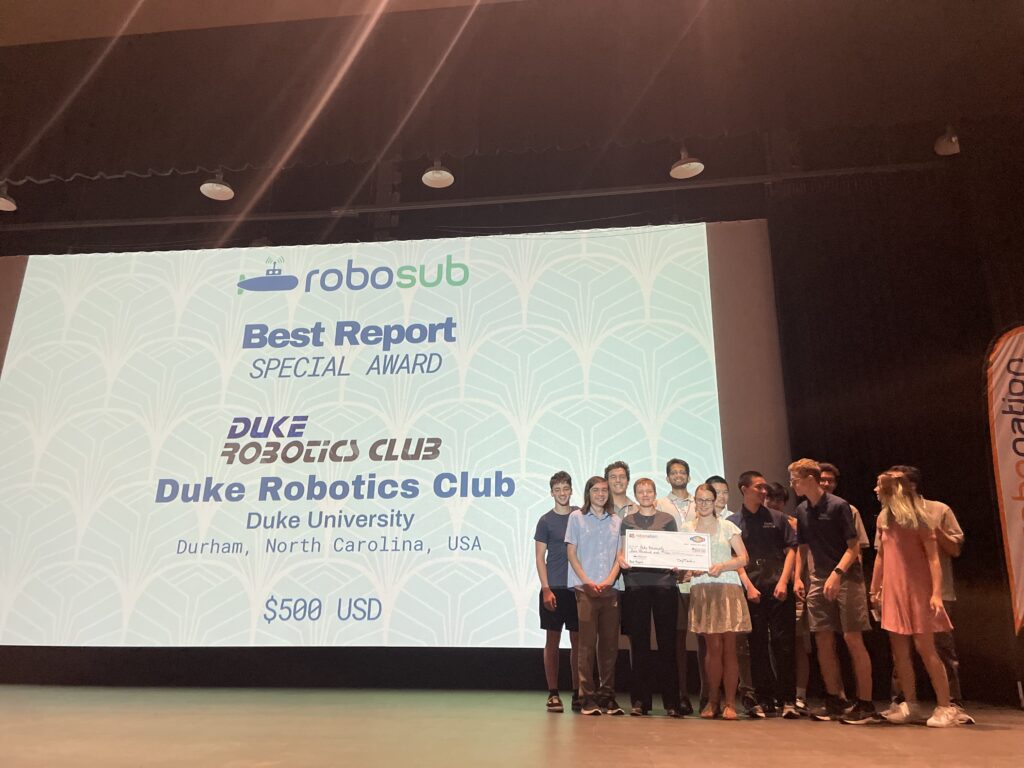

We received an award for best journal paper and placed 11th out of 54 teams! Our 2022 RoboSub submission features a redesigned legacy robot, Cthulhu.

2021

We placed 1st for our journal paper, 1st for our Propulsion System video, and 3rd for our Sensor Optimization video out of 54 teams! Our 2021 RoboSub submission features a newly designed robot, Cthulhu v2. Built around the core tenets of flexibility and adaptability, Cthulhu v2 offers more reliability than our previous robots when performing fundamental and complex tasks. For more information on the development of Cthulhu v2, see our RoboSub 2021 page. Also, check out the recap here on our blog.